Difference between revisions of "Kaustubh Shivdikar"

m (Papers added) (Tag: Visual edit) |

(Ph.D. thesis added) (Tag: Visual edit) |

||

| (51 intermediate revisions by the same user not shown) | |||

| Line 9: | Line 9: | ||

*GPU Kernel Design | *GPU Kernel Design | ||

| − | Contact: shivdikar.k [at] northeastern [dot] edu | + | Contact: |

| + | |||

| + | *shivdikar.k [at] northeastern [dot] edu | ||

| + | *mail [at] kaustubh [dot] us | ||

[https://www.researchgate.net/profile/Kaustubh-Shivdikar ResearchGate] [https://scholar.google.com/citations?user=NCTXsGMAAAAJ&hl=en&oi=ao Google Scholar] | [https://www.researchgate.net/profile/Kaustubh-Shivdikar ResearchGate] [https://scholar.google.com/citations?user=NCTXsGMAAAAJ&hl=en&oi=ao Google Scholar] | ||

<br /> | <br /> | ||

| + | |||

| + | ======[[https://wiki.kaustubh.us/w/img_auth.php/resume.pdf Resume]]====== | ||

<br /> | <br /> | ||

<br /> | <br /> | ||

<br /> | <br /> | ||

| − | <br /> | + | <br /><br /> |


| − | <br /> | ||

====Education==== | ====Education==== | ||

| − | *PhD - Computer Engineering, Northeastern University [ | + | *PhD - Computer Engineering, Northeastern University [May 2024] |

*MS - Electrical and Computer Engineering, Northeastern University [May 2021] | *MS - Electrical and Computer Engineering, Northeastern University [May 2021] | ||

*BS - Electrical Engineering, Veermata Jijabai Technological Institute [May 2016] | *BS - Electrical Engineering, Veermata Jijabai Technological Institute [May 2016] | ||

| Line 45: | Line 37: | ||

*''Summer-Fall 2018 Coop:'' Mobile Robotics @ [https://www.adept.com/home/?region=us Omron Adept] with [https://www.linkedin.com/in/georgevpaul/ George Paul]. | *''Summer-Fall 2018 Coop:'' Mobile Robotics @ [https://www.adept.com/home/?region=us Omron Adept] with [https://www.linkedin.com/in/georgevpaul/ George Paul]. | ||


<br /> | <br /> | ||

<br /> | <br /> | ||

| Line 68: | Line 47: | ||

*June 2022: Mentored Lina Adkins for the GNN Acceleration project at [https://stem.northeastern.edu/summer/reu/pathways/students/ REU-Pathways] program | *June 2022: Mentored Lina Adkins for the GNN Acceleration project at [https://stem.northeastern.edu/summer/reu/pathways/students/ REU-Pathways] program | ||

| − | *May 2022: Served as '''Submission chair''' for [https://hpca-conf.org/2023/ HPCA 2023] conference. | + | *May 2022: Served as '''Submission co-chair''' for [https://hpca-conf.org/2023/ HPCA 2023] conference. |

*Jan 2020: '''Taught''' the GPU Programming Course at NEU | *Jan 2020: '''Taught''' the GPU Programming Course at NEU | ||

*April 2019: '''[https://coe.northeastern.edu/news/congratulations-rise2019-winners/ Graduate Innovator Award]''' at the RISE 2019 Research Expo for our poster Pi-Tiles | *April 2019: '''[https://coe.northeastern.edu/news/congratulations-rise2019-winners/ Graduate Innovator Award]''' at the RISE 2019 Research Expo for our poster Pi-Tiles | ||

| − | *April 2018: '''Best Poster Award''' at the RISE 2018 Research Expo for our poster The Prime Hexagon | + | *April 2018: '''[https://coe.northeastern.edu/news/ece-students-win-best-poster-at-2018-rise-expo/ Best Poster Award]''' at the RISE 2018 Research Expo for our poster The Prime Hexagon |

*Nov 2018: Mentored the NEU team for '''[https://www.mghpcc.org/cluster-racing-at-sc18/ Student Cluster Contest]''' at Super Computing Conference 2018 | *Nov 2018: Mentored the NEU team for '''[https://www.mghpcc.org/cluster-racing-at-sc18/ Student Cluster Contest]''' at Super Computing Conference 2018 | ||

*Nov 2017: Joined the NEU Team for '''Student Cluster Contest''' at Super Computing Conference 2017 | *Nov 2017: Joined the NEU Team for '''Student Cluster Contest''' at Super Computing Conference 2017 | ||

| Line 80: | Line 59: | ||

<br /> | <br /> | ||

<br /> | <br /> | ||


| + | ==Publications== | ||

| + | [[File:neuracore.png|right|frameless|76x76px|NeuraCore Image]] | ||

| + | |||

| + | ======[https://wiki.kaustubh.us/w/img_auth.php/NeuraChip_GNN_Accelerator.pdf NeuraChip: Accelerating GNN Computations with a Hash-based Decoupled Spatial Accelerator]====== | ||

| + | {{Nutshell|Decouples multiplication and addition operations to speed up GNNs.|title=NeuraChip}} | ||

| + | |||

| + | (ISCA 2024) [[https://wiki.kaustubh.us/w/img_auth.php/NeuraChip_GNN_Accelerator.pdf PDF]] [[https://www.researchgate.net/publication/380127919_NeuraChip_Accelerating_GNN_Computations_with_a_Hash-based_Decoupled_Spatial_Accelerator RG]] [Slides] [[https://github.com/NeuraChip/neurachip GitHub]] [[https://neurachip.us/ Website]] | ||

| + | |||

| + | ==.== | ||

| + | [[File:gpu icon.png|right|frameless|93x93px]] | ||

| + | |||

| + | ======[https://wiki.kaustubh.us/w/img_auth.php/Graph_Accelerator_Thesis.pdf Enabling Accelerators for Graph Computing]====== | ||

| + | {{Nutshell|Software and hardware enhancements for GNNs.|title=Thesis}} | ||

| + | |||

| + | (Ph.D. Thesis) [[https://wiki.kaustubh.us/w/img_auth.php/Graph_Accelerator_Thesis.pdf PDF]] | ||

| + | ==.== | ||

| + | [[File:PIM Logo.png|alt=Scalability Limitations of Processing-in-Memory using Real System Evaluations|right|frameless|83x83px]] | ||

| + | |||

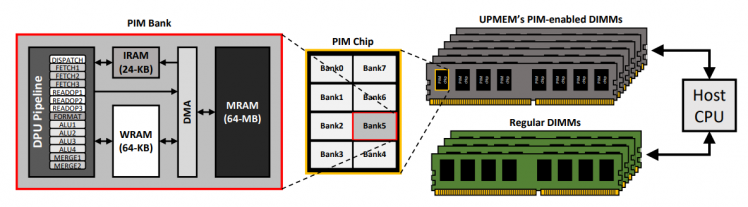

| + | ======[https://wiki.kaustubh.us/w/img_auth.php/scalability_limitations.pdf Scalability Limitations of Processing-in-Memory using Real System Evaluations]====== | ||

| + | {{Nutshell|Suggests interconnects between PIM nodes to enable efficient all-to-all communication.|title=Paper}} | ||

| + | |||

| + | ([https://dl.acm.org/doi/abs/10.1145/3639046 ACM SIGMETRICS 2024]) [[https://wiki.kaustubh.us/w/img_auth.php/scalability_limitations.pdf PDF]] [[https://www.researchgate.net/publication/378386110_Scalability_Limitations_of_Processing-in-Memory_using_Real_System_Evaluations RG]] | ||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | |+Abstract | ||

| + | !Abstract | ||

| + | |- | ||

| + | |Processing-in-memory (PIM), where the compute is moved closer to the memory or the data, has been widely explored to accelerate emerging workloads. Recently, different PIM-based systems have been announced by memory vendors to minimize data movement and improve performance as well as energy efficiency. One critical component of PIM is the large amount of compute parallelism provided across many PIM “nodes” or the compute units near the memory. In this work, we provide an extensive evaluation and analysis of real PIM systems based on UPMEM PIM. We show that while there are benefits of PIM, there are also scalability challenges and limitations as the number of PIM nodes increases. In particular, we show how collective communications that are commonly found in many kernels/workloads can be problematic for PIM systems. To evaluate the impact of collective communication in PIM architectures, we provide an in-depth analysis of two workloads on the UPMEM PIM system that utilize representative common collective communication patterns – AllReduce and All-to-All communication. Specifically, we evaluate 1) embedding tables that are commonly used in recommendation systems that require AllReduce and 2) the Number Theoretic Transform (NTT) kernel which is a critical component of Fully Homomorphic Encryption (FHE) that requires All-to-All communication. We analyze the performance benefits of these workloads and show how they can be efficiently mapped to the PIM architecture through alternative data partitioning. However, since each PIM compute unit can only access its local memory, when communication is necessary between PIM nodes (or remote data is needed), communication between the compute units must be done through the host CPU, thereby severely hampering application performance. 
To increase the scalability (or applicability) of PIM to future workloads, we make the case for how future PIM architectures need efficient communication or interconnection networks between the PIM nodes that require both hardware and software support. | ||

| + | |- | ||

| + | |[[File:Scaling PIM.png|frameless|748x748px]] | ||

| + | |- | ||

| + | |'''Authors''': Gilbert Jonatan, Haeyoon Cho, Hyojun Son, Xiangyu Wu, Neal Livesay, Evelio Mora, '''Kaustubh Shivdikar''', José L. Abellán, Ajay Joshi, David Kaeli, John Kim | ||

| + | |} | ||

| + | |||

| + | ==.== | ||

| + | [[File:network logo.png|alt=GNN Logo|right|frameless|82x82px|GNN Logo]] | ||

| + | |||

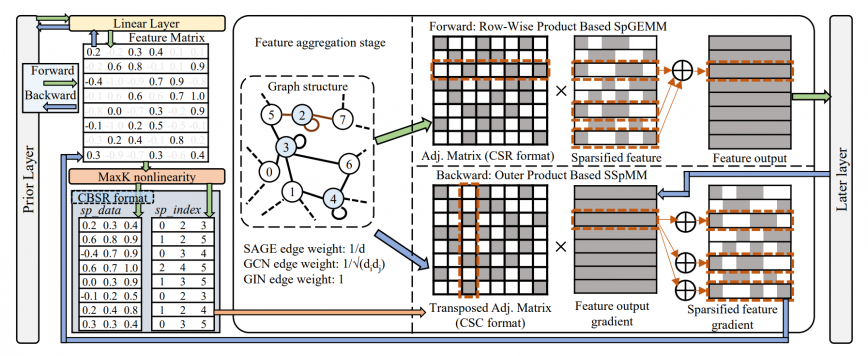

| + | ======[https://wiki.kaustubh.us/w/img_auth.php/maxk.pdf MaxK-GNN: Towards Theoretical Speed Limits for Accelerating Graph Neural Networks Training]====== | ||

| + | {{Nutshell|Presents the MaxK nonlinearity as a universal approximator to speed up GNN training.|title=Paper}} | ||

| + | |||

| + | (ASPLOS 2024) [[https://wiki.kaustubh.us/w/img_auth.php/maxk.pdf PDF]] [[https://github.com/harveyp123/MaxK-GNN GitHub]] [RG] | ||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | |+Abstract | ||

| + | !Abstract | ||

| + | |- | ||

| + | |In the acceleration of deep neural network training, the graphics processing unit (GPU) has become the mainstream platform. GPUs face substantial challenges on Graph Neural Networks (GNNs), such as workload imbalance and memory access irregularities, leading to underutilized hardware. Existing solutions such as PyG, DGL with cuSPARSE, and GNNAdvisor frameworks partially address these challenges. However, the memory traffic involved with Sparse-Dense Matrix Matrix Multiplication (SpMM) is still significant. We argue that drastic performance improvements can only be achieved by the vertical optimization of algorithm and system innovations, rather than treating the speedup optimization as an "after-thought" (i.e., (i) given a GNN algorithm, designing an accelerator, or (ii) given hardware, mainly optimizing the GNN algorithm). In this paper, we present MaxK-GNN, an advanced high-performance GPU training system integrating algorithms and system innovation. (i) We introduce the MaxK nonlinearity and provide a theoretical analysis of MaxK nonlinearity as a universal approximator, and present the Compressed Balanced Sparse Row (CBSR) format, designed to store the data and index of the feature matrix after nonlinearity; (ii) We design a coalescing enhanced forward computation with row-wise product-based Sparse Matrix-Matrix Multiplication (SpGEMM) Kernel using CBSR for input feature matrix fetching and strategic placement of a sparse output accumulation buffer in shared memory; (iii) We develop an optimized backward computation with outer product-based and Sampled Sparse Matrix Dense Matrix Multiplication (SSpMM) Kernel. We conduct extensive evaluations of MaxK-GNN and report the end-to-end system run-time. Experiments show that our MaxK-GNN system could approach the theoretical speedup limit according to Amdahl’s law. We achieve comparable accuracy to existing GNNs, but at a significantly increased speed: 3.22×/4.24× speedup (vs. 
theoretical limits, 5.52×/7.27×) on Reddit compared to DGL and GNNAdvisor implementations. Our implementation can be found on GitHub. | ||

| + | |- | ||

| + | |[[File:MaxK GNN Operations.png|frameless|868x868px]] | ||

| + | Training dataflow of a single MaxK-based GNN layer. In the backward computation, the CSC format of the transposed matrix is identical to the CSR format of the original matrix. | ||

| + | |- | ||

| + | |'''Authors''': Hongwu Peng, Xi Xie, '''Kaustubh Shivdikar''', MD Amit Hasan, Shaoyi Huang, Omer Khan, Caiwen Ding, David Kaeli | ||

| + | |} | ||
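The caption's remark about the backward pass rests on a general property of sparse formats: the CSR arrays (row pointer, column indices, values) of a matrix A are exactly the CSC arrays of its transpose, so A^T in CSC form can be obtained without moving any data. The minimal Python sketch below checks this on a small hand-picked matrix; the helper names `to_csr`, `to_csc`, and `transpose` are illustrative and are not taken from the MaxK-GNN code.

```python
def to_csr(dense):
    """Return (indptr, indices, data) in Compressed Sparse Row format."""
    indptr, indices, data = [0], [], []
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                indices.append(j)   # column index of each nonzero
                data.append(v)
        indptr.append(len(data))    # end offset of this row
    return indptr, indices, data


def to_csc(dense):
    """Return (indptr, indices, data) in Compressed Sparse Column format."""
    n_rows, n_cols = len(dense), len(dense[0])
    indptr, indices, data = [0], [], []
    for j in range(n_cols):
        for i in range(n_rows):
            if dense[i][j] != 0:
                indices.append(i)   # row index of each nonzero
                data.append(dense[i][j])
        indptr.append(len(data))    # end offset of this column
    return indptr, indices, data


def transpose(dense):
    return [list(col) for col in zip(*dense)]


A = [[0, 2, 0, 1],
     [3, 0, 0, 0],
     [0, 4, 5, 0]]

print(to_csr(A))                          # ([0, 2, 3, 5], [1, 3, 0, 1, 2], [2, 1, 3, 4, 5])
print(to_csc(transpose(A)) == to_csr(A))  # True
```

The same argument run in reverse gives CSR(A^T) = CSC(A), which is why a backward pass that needs the transposed adjacency can reuse the forward-pass arrays.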

| + | |||

| + | ==.== | ||

| + | [[File:AMD MI100 GPU.png|right|frameless|226x226px|AMD MI100 GPU]] | ||

| + | |||

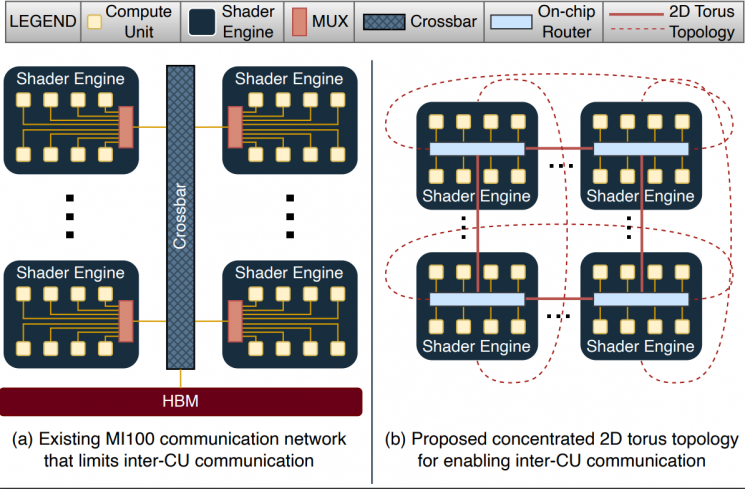

| + | ======[https://wiki.kaustubh.us/w/img_auth.php/GME.pdf GME: GPU-based Microarchitectural Extensions to Accelerate Homomorphic Encryption]====== | ||

| + | {{Nutshell|Enhances the AMD MI100 GPU's on-chip network to accelerate homomorphic encryption.|title=GME}} | ||

| + | |||

| + | ([https://doi.org/10.1145/3613424.3614279 IEEE/ACM MICRO 2023]) [[https://wiki.kaustubh.us/w/img_auth.php/GME.pdf PDF]] [[https://www.researchgate.net/publication/374059839_GME_GPU-based_Microarchitectural_Extensions_to_Accelerate_Homomorphic_Encryption RG]] [[https://wiki.kaustubh.us/w/img_auth.php/GME_MICRO_2023_Slides.pdf Slides]] | ||

| + | |||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | |+Abstract | ||

| + | !Abstract | ||

| + | |- | ||

| + | |Fully Homomorphic Encryption (FHE) enables the processing of encrypted data without decrypting it. | ||

| + | |||

| + | FHE has garnered significant attention over the past decade as it supports secure outsourcing of data processing to remote cloud services. | ||

| + | |||

| + | Despite its promise of strong data privacy and security guarantees, FHE introduces a slowdown of up to five orders of magnitude as compared to the same computation using plaintext data. | ||

| + | |||

| + | This overhead is presently a major barrier to the commercial adoption of FHE. | ||

| + | |||

| + | In this work, we leverage GPUs to accelerate FHE, capitalizing on a well-established GPU ecosystem available in the cloud. | ||

| + | |||

| + | We propose GME, which combines three key microarchitectural extensions along with a compile-time optimization to the current AMD CDNA GPU architecture. | ||

| + | |||

| + | First, GME integrates a lightweight on-chip compute unit (CU)-side hierarchical interconnect to retain ciphertext in cache across FHE kernels, thus eliminating redundant memory transactions. | ||

| + | |||

| + | Second, to tackle compute bottlenecks, GME introduces special MOD-units that provide native custom hardware support for modular reduction operations, one of the most commonly executed sets of operations in FHE. | ||

| + | |||

| + | Third, by integrating the MOD-unit with our novel pipelined 64-bit integer arithmetic cores (WMAC-units), GME further accelerates FHE workloads by 19%. | ||

| + | |||

| + | Finally, we propose a Locality-Aware Block Scheduler (LABS) that exploits the temporal locality available in FHE primitive blocks. | ||

| + | |||

| + | Incorporating these microarchitectural features and compiler optimizations, we create a synergistic approach achieving average speedups of 796x, 14.2x, and 2.3x over Intel Xeon CPU, NVIDIA V100 GPU, and Xilinx FPGA implementations, respectively. | ||

| + | |- | ||

| + | |[[File:GME NOC.png|frameless|745x745px]] | ||

| + | |- | ||

| + | |Authors: '''[[Kaustubh Shivdikar]]''', [https://scholar.google.com/citations?user=huCqT6IAAAAJ&hl=en&oi=ao Yuhui Bao], [https://www.linkedin.com/in/rashmi-agrawal-9a0601133/ Rashmi Agrawal], Michael Shen, [https://ieeexplore.ieee.org/author/37088654483 Gilbert Jonatan], [https://eveliomora.es/ Evelio Mora], Alexander Ingare, [https://neallivesay.github.io/ Neal Livesay], [https://sites.google.com/ucam.edu/jlabellan/ José L. Abellán], [http://icn.kaist.ac.kr/~jjk12/ John Kim], [https://www.bu.edu/eng/profile/ajay-joshi/ Ajay Joshi], [https://ece.northeastern.edu/fac-ece/kaeli.html David Kaeli] | ||

| + | |} | ||

| + | |||

| + | ==.== | ||

| + | [[File:V100 GPU.png|right|frameless|168x168px]] | ||

| + | |||

| + | ======[https://wiki.kaustubh.us/w/img_auth.php/FHE_IEEE_Micro.pdf Accelerating Finite Field Arithmetic for Homomorphic Encryption on GPUs]====== | ||

| + | {{Nutshell|Optimizes the modulo ("%") operator on the NVIDIA V100 GPU to speed up homomorphic encryption.|title=Paper}} | ||

| + | |||

| + | ([https://ieeexplore.ieee.org/document/10068510 IEEE MICRO 2023]) [[https://wiki.kaustubh.us/w/img_auth.php/FHE_IEEE_Micro.pdf PDF]] [[https://www.researchgate.net/publication/369197973_Accelerating_Finite_Field_Arithmetic_for_Homomorphic_Encryption_on_GPUs RG]] | ||

| + | ==.== | ||

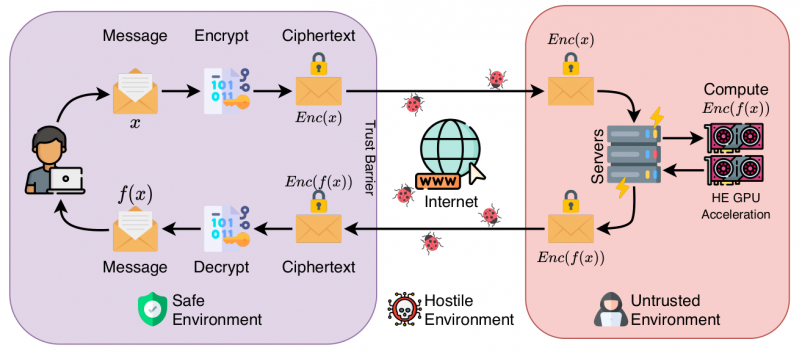

| + | [[File:Lady Bug.png|right|frameless|111x111px]] | ||

| − | == | + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/FHE_SEED_2022.pdf Accelerating Polynomial Multiplication for Homomorphic Encryption on GPUs]'''====== |

| − | [ | + | {{Nutshell|Leverages the NVIDIA V100's shared memory to accelerate homomorphic encryption.|title=Paper}} |

| − | + | ([https://seed22.engr.uconn.edu/ SEED 2022]) [[https://wiki.kaustubh.us/w/img_auth.php/FHE_SEED_2022.pdf PDF]] [[https://wiki.kaustubh.us/w/img_auth.php/FHE_SEED_2022_Slides.pdf Slides]] |

| − | ([https://seed22.engr.uconn.edu/ SEED 2022]) [[https://wiki.kaustubh.us/w/img_auth.php/ | + | [[https://www.researchgate.net/publication/363332393_Accelerating_Polynomial_Multiplication_for_Homomorphic_Encryption_on_GPUs RG]] |

{| class="wikitable mw-collapsible mw-collapsed" | {| class="wikitable mw-collapsible mw-collapsed" | ||

| Line 118: | Line 184: | ||

|} | |} | ||

| + | ==.== | ||

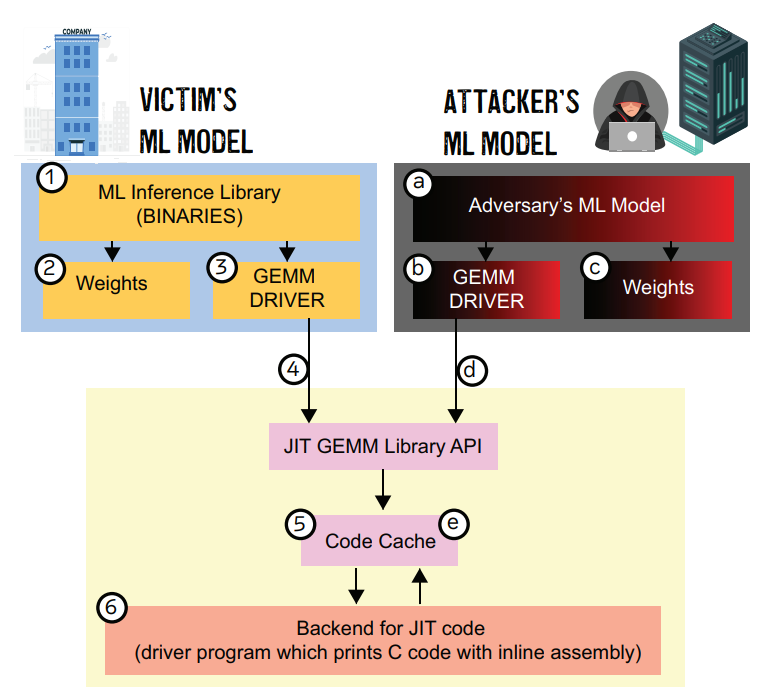

| + | [[File:Hacker icon.png|right|frameless|90x90px]] | ||

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/JAXED_Reverse_Engineering_DNN_Architectures_Leveraging_JIT_GEMM_Libraries.pdf JAXED: Reverse Engineering DNN Architectures Leveraging JIT GEMM Libraries]'''====== | ||

| + | {{Nutshell|Exposes a vulnerability in JIT code caches through a timing side-channel attack.|title=JAXED}} | ||

| − | + | ([https://www.seed-symposium.org/2021/index.html SEED 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/JAXED_Reverse_Engineering_DNN_Architectures_Leveraging_JIT_GEMM_Libraries.pdf PDF]] [[https://wiki.kaustubh.us/w/img_auth.php/JAXED_Slides.pdf Slides]] [[https://wiki.kaustubh.us/w/img_auth.php/jaxed_poster.pdf Poster]] | |

| − | + | [[https://www.researchgate.net/publication/355356425_JAXED_Reverse_Engineering_DNN_Architectures_Leveraging_JIT_GEMM_Libraries RG]] | |

| − | |||

| − | |||

| − | |||

| − | ([https://www.seed-symposium.org/2021/index.html SEED 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/JAXED_Reverse_Engineering_DNN_Architectures_Leveraging_JIT_GEMM_Libraries.pdf PDF]] [[https://wiki.kaustubh.us/w/img_auth.php/jaxed_poster.pdf Poster]] | ||

{| class="wikitable mw-collapsible mw-collapsed" | {| class="wikitable mw-collapsible mw-collapsed" | ||

| Line 142: | Line 208: | ||

|} | |} | ||

| + | ==.== | ||

| + | [[File:Mini GNN.png|right|frameless|98x98px]] | ||

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/GNNMark.pdf GNNMark: A benchmark suite to characterize graph neural network training on GPUs]'''====== | ||

| + | {{Nutshell|Created a standardized suite of GNN workloads to benchmark GPUs.|title=GNNMark}} | ||


([https://ispass.org/ispass2021/ ISPASS 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/GNNMark.pdf PDF]] | ([https://ispass.org/ispass2021/ ISPASS 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/GNNMark.pdf PDF]] | ||

| + | [[https://www.researchgate.net/publication/350159043_GNNMark_A_Benchmark_Suite_to_Characterize_Graph_Neural_Network_Training_on_GPUs RG]] | ||

{| class="wikitable mw-collapsible mw-collapsed" | {| class="wikitable mw-collapsible mw-collapsed" | ||

| Line 166: | Line 232: | ||

|} | |} | ||

| + | ==.== | ||

| + | [[File:Core Image SMASH.png|right|frameless|45x45px]] | ||

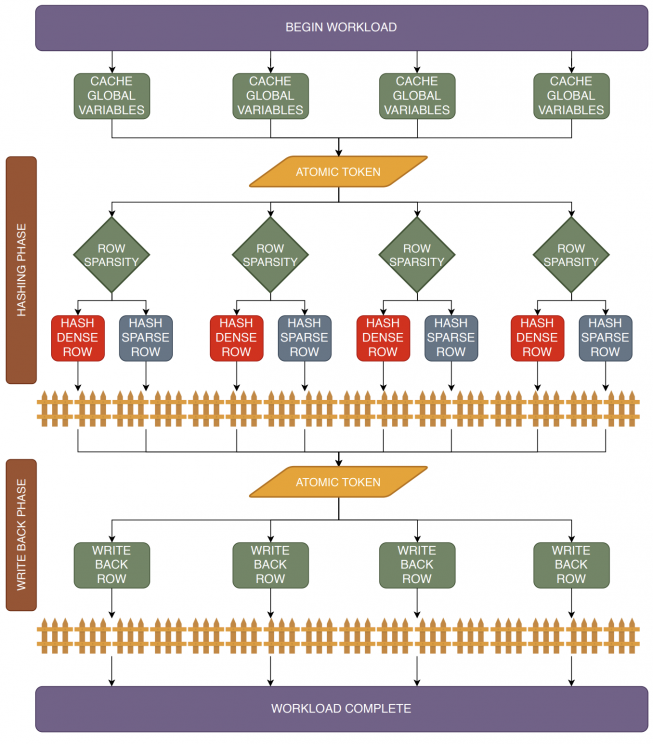

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/SMASH_Thesis.pdf SMASH: Sparse Matrix Atomic Scratchpad Hashing]'''====== | ||

| + | {{Nutshell|Optimized SpGEMM for Intel's PIUMA architecture.|title=SMASH}} | ||


([https://www.proquest.com/docview/2529815748?pq-origsite=gscholar&fromopenview=true MS Thesis, 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/SMASH_Thesis.pdf PDF]] | ([https://www.proquest.com/docview/2529815748?pq-origsite=gscholar&fromopenview=true MS Thesis, 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/SMASH_Thesis.pdf PDF]] | ||

| + | [[https://www.researchgate.net/publication/352018010_SMASH_Sparse_Matrix_Atomic_Scratchpad_Hashing RG]] | ||

{| class="wikitable mw-collapsible mw-collapsed" | {| class="wikitable mw-collapsible mw-collapsed" | ||

| Line 192: | Line 258: | ||

|} | |} | ||

| + | ==.== | ||

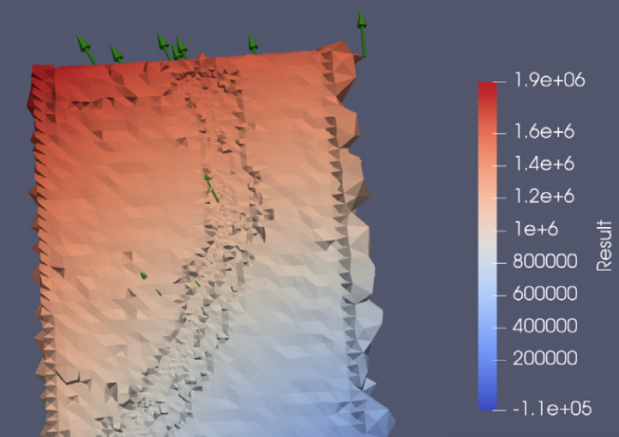

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/SC_18_Cluster_Contest_Paper.pdf Student cluster competition 2018, team northeastern university: Reproducing performance of a multi-physics simulations of the Tsunamigenic 2004 Sumatra Megathrust earthquake on the AMD EPYC 7551 architecture]'''====== | ||

| + | {{Nutshell|Optimizing an earthquake simulation workload on AMD CPUs.|title=Paper}} | ||


([https://sc18.supercomputing.org/ SC 2018]) [[https://wiki.kaustubh.us/w/img_auth.php/SC_18_Cluster_Contest_Paper.pdf PDF]] | ([https://sc18.supercomputing.org/ SC 2018]) [[https://wiki.kaustubh.us/w/img_auth.php/SC_18_Cluster_Contest_Paper.pdf PDF]] | ||

| + | [[https://www.researchgate.net/publication/336659232_Student_Cluster_Competition_2018_Team_Northeastern_University_Reproducing_Performance_of_a_Multi-Physics_Simulations_of_the_Tsunamigenic_2004_Sumatra_Megathrust_Earthquake_on_the_AMD_EPYC_7551_Archite RG]] | ||

{| class="wikitable mw-collapsible mw-collapsed" | {| class="wikitable mw-collapsible mw-collapsed" | ||

|+Abstract | |+Abstract | ||

| Line 211: | Line 277: | ||

|} | |} | ||

| + | ==.== | ||

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/BARC_speeding.pdf Speeding up DNNs using HPL based Fine-grained Tiling for Distributed Multi-GPU Training]'''====== | ||

| + | {{Nutshell|Evaluating an NVIDIA GPU cluster with the High-Performance Linpack (HPL) benchmark.|title=Paper}} | ||


([https://bostonarch.github.io/2018/ BARC 2018]) [[https://wiki.kaustubh.us/w/img_auth.php/BARC_speeding.pdf PDF]] | ([https://bostonarch.github.io/2018/ BARC 2018]) [[https://wiki.kaustubh.us/w/img_auth.php/BARC_speeding.pdf PDF]] | ||

| + | [[https://www.researchgate.net/publication/357766887_Speeding_up_DNNs_using_HPL_based_Fine-grained_Tiling_for_Distributed_Multi-GPU_Training RG]] | ||

| + | ==.== | ||

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/Video_Steganography.pdf Video steganography using encrypted payload for satellite communication]'''====== | ||

| + | {{Nutshell|Concealing secret messages in videos.|title=Paper}} | ||


([https://2017.aeroconf.org/ Aerospace Conference 2017]) [[https://wiki.kaustubh.us/w/img_auth.php/Video_Steganography.pdf PDF]] | ([https://2017.aeroconf.org/ Aerospace Conference 2017]) [[https://wiki.kaustubh.us/w/img_auth.php/Video_Steganography.pdf PDF]] | ||

| + | [[https://www.researchgate.net/publication/317702110_Video_steganography_using_encrypted_payload_for_satellite_communication RG]] | ||
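As a generic illustration of the idea in the title (hiding an encrypted payload inside video data), the sketch below XOR-encrypts a message with a keystream and writes the ciphertext bits into the least-significant bits of one frame's pixel bytes. This is a textbook LSB scheme assumed for illustration only: the paper's actual embedding method and cipher are not described on this page, the SHA-256 counter-mode keystream is a toy rather than a vetted cipher, and all function names are hypothetical.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 of key || counter (illustrative only, not a vetted cipher)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def xor_bytes(data: bytes, stream: bytes) -> bytes:
    """XOR is its own inverse, so the same call encrypts and decrypts."""
    return bytes(d ^ s for d, s in zip(data, stream))


def embed_lsb(frame: bytes, payload: bytes) -> bytearray:
    """Write payload bits (MSB first) into the least-significant bit of each frame byte."""
    bits = [(byte >> (7 - i)) & 1 for byte in payload for i in range(8)]
    if len(bits) > len(frame):
        raise ValueError("payload does not fit in this frame")
    stego = bytearray(frame)
    for pos, bit in enumerate(bits):
        stego[pos] = (stego[pos] & 0xFE) | bit
    return stego


def extract_lsb(frame: bytes, n_bytes: int) -> bytes:
    """Read n_bytes back out of the frame's least-significant bits."""
    out = bytearray()
    for b in range(n_bytes):
        value = 0
        for i in range(8):
            value = (value << 1) | (frame[8 * b + i] & 1)
        out.append(value)
    return bytes(out)


frame = bytes(range(256))          # stand-in for one frame's raw pixel bytes
key, message = b"shared-key", b"hi"
cipher = xor_bytes(message, keystream(key, len(message)))
stego = embed_lsb(frame, cipher)
recovered = xor_bytes(extract_lsb(stego, len(cipher)), keystream(key, len(cipher)))
print(recovered)  # b'hi'
```

Each carrier byte changes by at most one, which is what keeps the payload visually hidden; encrypting first means that even an extracted bit stream reveals nothing without the key.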

| + | ==.== | ||

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/missing_middle.pdf Missing 'Middle Scenarios' Uncovering Nuanced Conditions in Latin America's Housing Crisis]'''====== | ||

| + | {{Nutshell|Integrating LSTM models with Latin American housing data to devise solutions.|title=Paper}} | ||

| − | + | ([https://www.huduser.gov/portal/periodicals/cityscpe/vol19num2/article3.html Cityscape 2017]) [[https://wiki.kaustubh.us/w/img_auth.php/missing_middle.pdf PDF]] | |

| − | + | [[https://www.researchgate.net/publication/361864952_Missing_Middle_Scenarios_Uncovering_Nuanced_Conditions_in_Latin_America's_Housing_Crisis RG]] | |


| − | ([https://www.huduser.gov/portal/periodicals/cityscpe/vol19num2/article3.html Cityscape 2017]) [PDF] | ||


| + | ==.== | ||

| − | + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/dynamic_power.pdf Dynamic power allocation using Stackelberg game in a wireless sensor network]'''====== | |

| + | {{Nutshell|Employing game theory for power distribution models.|title=Paper}} | ||

| − | + | ([https://2016.aeroconf.org/ Aerospace Conference 2016]) [[https://wiki.kaustubh.us/w/img_auth.php/dynamic_power.pdf PDF]] | |

| − | ([https://2016.aeroconf.org/ Aerospace Conference 2016]) [PDF] | + | [[https://www.researchgate.net/publication/294873909_Dynamic_Power_Allocation_using_Stackelberg_Game_in_a_Wireless_Sensor_Network RG]] |

| + | ==.== | ||

| + | ======'''[https://wiki.kaustubh.us/w/img_auth.php/automatic_image.pdf Automatic image annotation using a hybrid engine]'''====== | ||

| + | {{Nutshell|A hybrid engine that merges feature extraction with language models.|title=Paper}} | ||

| − | + | ([https://ieeexplore.ieee.org/xpl/conhome/7438527/proceeding Indicon 2015]) [[https://wiki.kaustubh.us/w/img_auth.php/automatic_image.pdf PDF]] | |

| + | [[https://www.researchgate.net/publication/294874083_Automatic_Image_Annotation_using_a_Hybrid_Engine RG]] | ||


<br /> | <br /> | ||

<br /> | <br /> | ||

<br /> | <br /> | ||

<br /> | <br /> | ||

| + | <br /><br /> | ||

====Posters==== | ====Posters==== | ||

| + | *FHE [[https://wiki.kaustubh.us/w/img_auth.php/FHE_IUCRC_Poster.pdf PDF]] | ||

*JAXED [[https://wiki.kaustubh.us/w/img_auth.php/jaxed_poster.pdf PDF]] | *JAXED [[https://wiki.kaustubh.us/w/img_auth.php/jaxed_poster.pdf PDF]] | ||

*Pi-Tiles (Graduate Innovator Award) [[https://wiki.kaustubh.us/w/img_auth.php/Pi_Tiles.pdf PDF]] | *Pi-Tiles (Graduate Innovator Award) [[https://wiki.kaustubh.us/w/img_auth.php/Pi_Tiles.pdf PDF]] | ||

*The Prime Hexagon (Best Poster Award) [[https://wiki.kaustubh.us/w/img_auth.php/Prime_Hexagon.pdf PDF]] | *The Prime Hexagon (Best Poster Award) [[https://wiki.kaustubh.us/w/img_auth.php/Prime_Hexagon.pdf PDF]] | ||


<br /> | <br /> | ||

<br /> | <br /> | ||

Latest revision as of 08:19, 30 April 2024

Hi, I am Kaustubh, a Ph.D. candidate studying computer engineering in the NUCAR lab at Northeastern University with my advisor David Kaeli. My research focuses on designing hardware accelerators for sparse graph workloads.

My expertise lies in:

- Computer Architecture Simulator Design

- Graph Neural Network Accelerators

- Sparse Matrix Accelerators

- Homomorphic Encryption Accelerators

- GPU Kernel Design

Contact:

- shivdikar.k [at] northeastern [dot] edu

- mail [at] kaustubh [dot] us

[Resume]

Education

- PhD - Computer Engineering, Northeastern University [May 2024]

- MS - Electrical and Computer Engineering, Northeastern University [May 2021]

- BS - Electrical Engineering, Veermata Jijabai Technological Institute [May 2016]

Work

- Summer-Fall 2020 Coop: Parallel Computing Lab @ Intel Labs with Fabrizio Petrini.

- Summer-Fall 2019 Coop: Parallel Computing Lab @ Intel Labs with Fabrizio Petrini.

- Summer-Fall 2018 Coop: Mobile Robotics @ Omron Adept with George Paul.

Recent News

- June 2022: Mentored Lina Adkins for the GNN Acceleration project at REU-Pathways program

- May 2022: Served as Submission co-chair for HPCA 2023 conference.

- Jan 2020: Taught the GPU Programming Course at NEU

- April 2019: Graduate Innovator Award at the RISE 2019 Research Expo for our poster Pi-Tiles

- April 2018: Best Poster Award at the RISE 2018 Research Expo for our poster The Prime Hexagon

- Nov 2018: Mentored the NEU team for Student Cluster Contest at Super Computing Conference 2018

- Nov 2017: Joined the NEU Team for Student Cluster Contest at Super Computing Conference 2017

Publications

NeuraChip: Accelerating GNN Computations with a Hash-based Decoupled Spatial Accelerator

NeuraChip in a nutshell: Decouples multiplication and addition operations to speed up GNNs.

(ISCA 2024) [PDF] [RG] [Slides] [GitHub] [Website]
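As a rough software analogy for the NeuraChip entry above, the sketch below splits row-wise (Gustavson-style) sparse matrix multiplication into a multiply phase that only emits partial products and a separate accumulate phase that merges them in a hash table keyed by output coordinate. This is an assumption-laden illustration of "decoupled multiplication and addition" with hash-based accumulation, not NeuraChip's hardware design; the dict-of-rows matrix layout and the function names are hypothetical.

```python
from collections import defaultdict


def multiply_phase(a_rows, b_rows):
    """Multiply step: emit every partial product (i, j, a_ik * b_kj) without summing."""
    for i, a_row in a_rows.items():
        for k, a_val in a_row.items():
            for j, b_val in b_rows.get(k, {}).items():
                yield i, j, a_val * b_val


def accumulate_phase(partials):
    """Accumulate step: merge partial products that share an output coordinate,
    using a hash table keyed by (row, col)."""
    table = defaultdict(float)
    for i, j, value in partials:
        table[(i, j)] += value
    return dict(table)


# Sparse matrices as {row: {col: value}} (a hypothetical layout for this sketch).
A = {0: {0: 1.0, 2: 2.0}, 1: {1: 3.0}}
B = {0: {0: 4.0, 1: 5.0}, 1: {1: 6.0}, 2: {0: 7.0}}

C = accumulate_phase(multiply_phase(A, B))
print(C)  # {(0, 0): 18.0, (0, 1): 5.0, (1, 1): 18.0}
```

Because the two phases communicate only through the stream of partial products, they could in principle run on separate units at different rates; whether this mirrors NeuraChip's exact pipeline is an assumption.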

.

Enabling Accelerators for Graph Computing

Thesis in a nutshell: Software and hardware enhancements for GNNs.

(Ph.D. Thesis) [PDF]

.

Scalability Limitations of Processing-in-Memory using Real System Evaluations

Paper in a nutshell: Suggests interconnects between PIM nodes to enable efficient all-to-all communication.

(ACM SIGMETRICS 2024) [PDF] [RG]
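The scaling problem behind this entry can be made concrete with a toy model. The paper's abstract notes that each UPMEM PIM compute unit can only access its local memory, so data exchanged between nodes has to be relayed by the host CPU. The Python sketch below assumes every off-node chunk costs exactly one host copy and shows that an all-to-all exchange among `p` nodes then needs `p * (p - 1)` such copies. The function and data layout are hypothetical and do not use the UPMEM SDK.

```python
def host_mediated_all_to_all(node_mem):
    """node_mem[s][d] is the chunk that node s must deliver to node d.

    Each PIM node can only touch its own memory, so the host copies every
    off-node chunk: one read from node s followed by one write into node d.
    Returns (received, host_copies) where received[d][s] is the chunk from s.
    """
    p = len(node_mem)
    received = [[None] * p for _ in range(p)]
    host_copies = 0
    for s in range(p):
        for d in range(p):
            if s == d:
                received[d][s] = node_mem[s][d]   # local chunk never leaves the node
            else:
                chunk = node_mem[s][d]            # host reads from node s ...
                received[d][s] = chunk            # ... and writes into node d
                host_copies += 1
    return received, host_copies


p = 4
node_mem = [[f"{s}->{d}" for d in range(p)] for s in range(p)]
received, copies = host_mediated_all_to_all(node_mem)
print(received[2])  # ['0->2', '1->2', '2->2', '3->2']
print(copies)       # 12, i.e. p * (p - 1)
```

With a fixed chunk size, the host traffic grows quadratically in the node count while host bandwidth stays fixed, which is why direct interconnects between PIM nodes become attractive as systems scale.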

.

MaxK-GNN: Towards Theoretical Speed Limits for Accelerating Graph Neural Networks Training

Paper in a nutshell: Presents the MaxK nonlinearity as a universal approximator to speed up GNN training.

(ASPLOS 2024) [PDF] [GitHub] [RG]
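A minimal pure-Python sketch of the MaxK nonlinearity named above: for each row of a feature matrix it keeps the k largest entries and zeroes the rest, so every row becomes exactly k-sparse. The second function stores the result as two dense n-by-k lists (values and column indices). That layout is our reading of "balanced" in the Compressed Balanced Sparse Row (CBSR) format introduced in the paper's abstract; it is an assumption for illustration, not the MaxK-GNN implementation. Tie-breaking between equal values is an arbitrary choice here.

```python
def top_k_columns(row, k):
    """Column indices of the k largest entries in a row, in ascending column order."""
    if not 1 <= k <= len(row):
        raise ValueError("k must be between 1 and the row length")
    by_value = sorted(range(len(row)), key=lambda j: row[j])
    return sorted(by_value[-k:])


def maxk(x, k):
    """MaxK nonlinearity: keep the k largest entries of each row and zero the rest."""
    out = []
    for row in x:
        keep = set(top_k_columns(row, k))
        out.append([v if j in keep else 0.0 for j, v in enumerate(row)])
    return out


def to_cbsr(x, k):
    """Assumed CBSR-style layout: every row holds exactly k nonzeros, so values and
    column indices fit in two dense n-by-k lists and no row-pointer array is needed."""
    values, columns = [], []
    for row in x:
        cols = top_k_columns(row, k)
        columns.append(cols)
        values.append([row[j] for j in cols])
    return values, columns


features = [[0.1, 0.9, -0.3, 0.5],
            [2.0, -1.0, 0.7, 0.2]]
print(maxk(features, 2))     # [[0.0, 0.9, 0.0, 0.5], [2.0, 0.0, 0.7, 0.0]]
print(to_cbsr(features, 2))  # ([[0.9, 0.5], [2.0, 0.7]], [[1, 3], [0, 2]])
```

Since every row carries the same number of nonzeros, work can be split evenly across rows, avoiding the load imbalance the abstract attributes to irregular GNN sparsity.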

.

GME: GPU-based Microarchitectural Extensions to Accelerate Homomorphic Encryption

GME in a nutshell: Enhances the AMD MI100 GPU's on-chip network to accelerate homomorphic encryption.

(IEEE/ACM MICRO 2023) [PDF] [RG] [Slides]

| Abstract |

|---|

| Fully Homomorphic Encryption (FHE) enables the processing of encrypted data without decrypting it.

FHE has garnered significant attention over the past decade as it supports secure outsourcing of data processing to remote cloud services. Despite its promise of strong data privacy and security guarantees, FHE introduces a slowdown of up to five orders of magnitude as compared to the same computation using plaintext data. This overhead is presently a major barrier to the commercial adoption of FHE. In this work, we leverage GPUs to accelerate FHE, capitalizing on a well-established GPU ecosystem available in the cloud. We propose GME, which combines three key microarchitectural extensions along with a compile-time optimization to the current AMD CDNA GPU architecture. First, GME integrates a lightweight on-chip compute unit (CU)-side hierarchical interconnect to retain ciphertext in cache across FHE kernels, thus eliminating redundant memory transactions. Second, to tackle compute bottlenecks, GME introduces special MOD-units that provide native custom hardware support for modular reduction operations, one of the most commonly executed sets of operations in FHE. Third, by integrating the MOD-unit with our novel pipelined 64-bit integer arithmetic cores (WMAC-units), GME further accelerates FHE workloads by 19%. Finally, we propose a Locality-Aware Block Scheduler (LABS) that exploits the temporal locality available in FHE primitive blocks. Incorporating these microarchitectural features and compiler optimizations, we create a synergistic approach achieving average speedups of 796x, 14.2x, and 2.3x over Intel Xeon CPU, NVIDIA V100 GPU, and Xilinx FPGA implementations, respectively. |


| Authors: Kaustubh Shivdikar, Yuhui Bao, Rashmi Agrawal, Michael Shen, Gilbert Jonatan, Evelio Mora, Alexander Ingare, Neal Livesay, José L. Abellán, John Kim, Ajay Joshi, David Kaeli |

.

Accelerating Finite Field Arithmetic for Homomorphic Encryption on GPUs

Paper in a nutshell: Optimizes the modulo ("%") operator on the NVIDIA V100 GPU to speed up homomorphic encryption.

(IEEE MICRO 2023) [PDF] [RG]
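To illustrate why replacing the generic "%" operator can pay off, here is a minimal sketch of Barrett reduction, a standard way to compute x mod q with multiplications and shifts instead of a division. It assumes a fixed modulus q with k = q.bit_length() and inputs 0 <= x < 2^(2k), which covers the product of any two residues; the function names are illustrative and this is not the kernel from the paper. Python's unbounded integers hide the 128-bit intermediate products that a GPU implementation would have to assemble from 64-bit multiply instructions.

```python
def barrett_setup(q):
    """Precompute k (bit width of q) and mu = floor(2^(2k) / q) once per modulus."""
    k = q.bit_length()
    mu = (1 << (2 * k)) // q
    return k, mu


def barrett_reduce(x, q, k, mu):
    """Compute x mod q for 0 <= x < 2^(2k) without a division.

    t = floor(x * mu / 2^(2k)) underestimates floor(x / q) by at most 1,
    so a single conditional subtraction finishes the reduction.
    """
    t = (x * mu) >> (2 * k)
    r = x - t * q
    if r >= q:
        r -= q
    return r


q = 0xFFFFFFFF00000001          # example 64-bit modulus, 2^64 - 2^32 + 1
k, mu = barrett_setup(q)
a, b = 0x123456789ABCDEF0, 0x0FEDCBA987654321
print(barrett_reduce(a * b, q, k, mu) == (a * b) % q)  # True
```

The precomputation happens once per modulus, so each reduction costs two multiplications, a shift, and one compare-and-subtract, all of which map to integer instructions.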

.

Accelerating Polynomial Multiplication for Homomorphic Encryption on GPUs

Paper in a nutshell: Leverages the NVIDIA V100's shared memory to accelerate homomorphic encryption.

(SEED 2022) [PDF] [Slides] [RG]

| Abstract |

|---|

| Homomorphic Encryption (HE) enables users to securely outsource both the storage and computation of sensitive data to untrusted servers. Not only does FHE offer an attractive solution for security in cloud systems, but lattice-based FHE systems are also believed to be resistant to attacks by quantum computers. However, current FHE implementations suffer from prohibitively high latency. For lattice-based FHE to become viable for real-world systems, it is necessary for the key bottlenecks---particularly polynomial multiplication---to be highly efficient.

In this paper, we present a characterization of GPU-based implementations of polynomial multiplication. We begin with a survey of modular reduction techniques and analyze several variants of the widely-used Barrett modular reduction algorithm. We then propose a modular reduction variant optimized for 64-bit integer words on the GPU, obtaining a 1.8x speedup over the existing comparable implementations.

|

FHE protects against network insecurities in untrusted cloud services, enabling users to securely offload sensitive data |

| Authors: Kaustubh Shivdikar, Gilbert Jonatan, Evelio Mora, Neal Livesay, Rashmi Agrawal, Ajay Joshi, José L. Abellán, John Kim, David Kaeli |
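Barrett reduction replaces the division in `x mod q` with a multiplication by a precomputed constant and a shift. The sketch below shows the textbook form. It assumes a modulus `q` of `k` bits and inputs below 2^(2k), such as the product of two residues. Python's unbounded integers stand in for the 128-bit intermediates a GPU kernel would have to emulate. This is not the optimized 64-bit variant proposed in the paper, and the helper names are hypothetical.

```python
def barrett_setup(q):
    """Precompute k = bit length of q and mu = floor(2**(2k) / q)."""
    k = q.bit_length()
    mu = (1 << (2 * k)) // q
    return k, mu

def barrett_reduce(x, q, k, mu):
    """Return x mod q for 0 <= x < 2**(2k) using only multiply, shift, subtract."""
    assert 0 <= x < (1 << (2 * k))
    q_hat = (x * mu) >> (2 * k)  # estimate of floor(x / q); too small by at most 1
    r = x - q_hat * q            # so 0 <= r < 2q
    if r >= q:                   # single conditional correction
        r -= q
    return r

def modmul(a, b, q, k, mu):
    """Modular multiplication of residues a, b < q via Barrett reduction."""
    return barrett_reduce(a * b, q, k, mu)

q = (1 << 61) - 1  # a 61-bit prime modulus that fits in a 64-bit word
k, mu = barrett_setup(q)
print(modmul(123456789, 987654321, q, k, mu) == (123456789 * 987654321) % q)  # True
```

Because `mu` is computed once per modulus, each reduction costs two multiplications and at most one subtraction. On GPUs this is far cheaper than a hardware or emulated 64-bit integer division.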

.

JAXED: Reverse Engineering DNN Architectures Leveraging JIT GEMM Libraries

JAXED in a nutshell: Exposed a vulnerability in JIT software code caches through a timing side-channel attack. |

(SEED 2021) [PDF] [Slides] [Poster] [RG]

| Abstract |

|---|

| General matrix multiplication (GEMM) libraries on x86 architectures have recently adopted Just-in-time (JIT) based optimizations to dramatically reduce the execution time of small and medium-sized matrix multiplications. These optimizations target the latest CPU architectural extensions, such as AVX2 and AVX-512. Although JIT compilers can provide impressive speedups to GEMM libraries, they expose a new attack surface through their built-in JIT code caches. These software-based caches allow an adversary to extract sensitive information through carefully designed timing attacks. The attack surface of such libraries has become more prominent due to their widespread integration into popular Machine Learning (ML) frameworks such as PyTorch and TensorFlow.

|

Attack Surface: After the victim’s execution, the victim leaves behind information about its model hyperparameters in the JIT code cache. The attacker probes this JIT code cache through the attacker’s ML model and observes timing information to determine the victim’s model hyperparameters. |

| Authors: Malith Jayaweera, Kaustubh Shivdikar, Yanzhi Wang, David Kaeli |
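The following toy model makes the attack surface concrete. It does not use the API of any real GEMM library. It models a JIT code cache keyed by GEMM shape, where a miss pays a simulated compile cost and a hit does not. A real attack measures wall-clock time. Here, `COMPILE_COST` and `RUN_COST` are hypothetical cost units chosen so the behavior is deterministic, and the victim's layer shapes are made up for illustration.

```python
class JitGemmCache:
    """Toy JIT code cache: generated kernels are keyed by GEMM shape (m, n, k)."""
    COMPILE_COST = 100  # hypothetical cost of generating a new kernel
    RUN_COST = 1        # hypothetical cost of running a cached kernel

    def __init__(self):
        self._kernels = set()

    def gemm(self, m, n, k):
        """Run a GEMM of the given shape and return its simulated latency."""
        key = (m, n, k)
        if key in self._kernels:
            return self.RUN_COST
        self._kernels.add(key)  # first call "compiles" and caches the kernel
        return self.COMPILE_COST + self.RUN_COST


def probe_shapes(cache, candidate_shapes, threshold=10):
    """Attacker: time each candidate shape; fast calls reveal shapes the victim used."""
    return [s for s in candidate_shapes if cache.gemm(*s) < threshold]


shared = JitGemmCache()
# Victim: a hypothetical MLP layer with hidden width 256, batch 32, input size 784.
shared.gemm(32, 256, 784)
shared.gemm(32, 10, 256)
candidates = [(32, h, 784) for h in (128, 256, 512)]
print(probe_shapes(shared, candidates))  # [(32, 256, 784)]
```

Probing a shape also caches it, so a second probe of the same shape would always look like a hit. In this model, each candidate therefore yields information only on its first probe after the victim runs.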

.

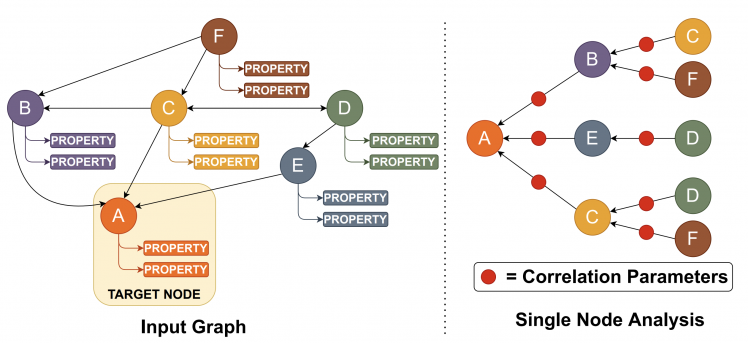

GNNMark: A benchmark suite to characterize graph neural network training on GPUs

GNNMark in a nutshell: Created a standardized suite of GNN workloads to benchmark GPUs. |

(ISPASS 2021) [PDF] [RG]

| Abstract |

|---|

| Graph Neural Networks (GNNs) have emerged as a promising class of Machine Learning algorithms for training on non-Euclidean data. GNNs are widely used in recommender systems, drug discovery, text understanding, and traffic forecasting. Given their energy efficiency and high performance, GPUs are a natural choice for accelerating GNN training. We therefore want to better understand the architectural and system-level implications of training GNNs on GPUs. Presently, there is no benchmark suite designed to study GNN training workloads.

|

Graph Neural Network Analysis |

| Authors: Trinayan Baruah, Kaustubh Shivdikar, Shi Dong, Yifan Sun, Saiful A Mojumder, Kihoon Jung, José L. Abellán, Yash Ukidave, Ajay Joshi, John Kim, David Kaeli |
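For readers unfamiliar with GNN workloads, the sketch below shows one message-passing step. It assumes a GCN-style mean aggregation over each node's neighbors plus the node itself, and it omits the learned weights and the nonlinearity. The data-dependent gather over `neighbors` is the kind of irregular memory access that makes GNN training worth characterizing on GPUs. The code is illustrative and is not taken from GNNMark.

```python
def mean_aggregate(features, neighbors):
    """One message-passing step on a graph given as an adjacency list.

    features[v] is node v's feature vector; neighbors[v] lists v's neighbor ids.
    Each node's new vector is the mean over itself and its neighbors.
    """
    out = []
    for v, feat in enumerate(features):
        group = [v] + neighbors[v]  # irregular, data-dependent gather
        dim = len(feat)
        out.append([sum(features[u][d] for u in group) / len(group) for d in range(dim)])
    return out
```

For example, on the path graph 0-1-2 with `features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]`, node 0 averages itself with node 1 and ends up with `[0.5, 0.5]`.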

.

SMASH: Sparse Matrix Atomic Scratchpad Hashing

SMASH in a nutshell: Optimized SpGEMM for Intel's PIUMA architecture. |

(MS Thesis, 2021) [PDF] [RG]
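SpGEMM (sparse matrix times sparse matrix) is commonly computed row by row using Gustavson's algorithm. Partial products for each output row are merged in an accumulator, and hash tables are a popular choice for that accumulator. The sketch below shows this approach on CSR matrices, with a Python dict standing in for the hash table. It is a hypothetical single-threaded illustration, not the SMASH implementation. It also omits the atomics and scratchpad memory that the thesis title refers to.

```python
def spgemm_hash(A, B):
    """C = A @ B for CSR matrices given as (indptr, indices, data) tuples.

    Row-wise (Gustavson) SpGEMM: for each row i of A, scale and merge the rows
    of B selected by A's nonzeros, accumulating into a per-row hash table.
    """
    a_ptr, a_idx, a_val = A
    b_ptr, b_idx, b_val = B
    c_ptr, c_idx, c_val = [0], [], []
    for i in range(len(a_ptr) - 1):
        acc = {}  # hash accumulator: column j -> partial sum of C[i, j]
        for p in range(a_ptr[i], a_ptr[i + 1]):
            k, a_ik = a_idx[p], a_val[p]
            for q in range(b_ptr[k], b_ptr[k + 1]):
                j = b_idx[q]
                acc[j] = acc.get(j, 0) + a_ik * b_val[q]
        for j in sorted(acc):  # emit row i in column order
            c_idx.append(j)
            c_val.append(acc[j])
        c_ptr.append(len(c_idx))
    return c_ptr, c_idx, c_val
```

On a parallel machine, many threads may update the same output row at once. Atomic updates to a fast on-chip hash table are one way to handle such collisions.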

.

Student Cluster Competition 2018, Team Northeastern University: Reproducing performance of a multi-physics simulations of the Tsunamigenic 2004 Sumatra Megathrust earthquake on the AMD EPYC 7551 architecture

Paper in a nutshell: Optimizing an earthquake simulation workload on AMD CPUs. |

.

Speeding up DNNs using HPL based Fine-grained Tiling for Distributed Multi-GPU Training

Paper in a nutshell: Evaluating a cluster equipped with NVIDIA GPUs using the Linpack benchmark. |

.

Video steganography using encrypted payload for satellite communication

Paper in a nutshell: Concealing secret messages in videos. |

(Aerospace Conference 2017) [PDF] [RG]
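A common way to conceal data in video is least-significant-bit (LSB) embedding. Each payload bit overwrites the lowest bit of one byte of a frame, so each value changes by at most 1. The sketch below encrypts the payload first and then embeds it. It assumes raw, uncompressed frame bytes. The repeating-key XOR is a placeholder for a real cipher, the function names are hypothetical, and this is not necessarily the scheme used in the paper.

```python
def xor_cipher(data: bytes, key: bytes) -> bytes:
    """Toy repeating-key XOR (NOT secure; stands in for a real cipher such as AES)."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))

def embed_lsb(frame: bytearray, payload: bytes) -> bytearray:
    """Hide payload bits (MSB first) in the least-significant bits of frame bytes."""
    bits = [(byte >> (7 - i)) & 1 for byte in payload for i in range(8)]
    if len(bits) > len(frame):
        raise ValueError("payload too large for this frame")
    out = bytearray(frame)
    for n, bit in enumerate(bits):
        out[n] = (out[n] & 0xFE) | bit  # clear the LSB, then set it to the payload bit
    return out

def extract_lsb(frame: bytes, n_bytes: int) -> bytes:
    """Read n_bytes back from the frame's least-significant bits (MSB first)."""
    result = bytearray()
    for j in range(n_bytes):
        byte = 0
        for i in range(8):
            byte = (byte << 1) | (frame[8 * j + i] & 1)
        result.append(byte)
    return bytes(result)

frame = bytearray(i % 256 for i in range(64))  # stand-in for raw frame bytes
key = b"k3y"
stego = embed_lsb(frame, xor_cipher(b"hi", key))
print(xor_cipher(extract_lsb(stego, 2), key))  # b'hi'
```

Encrypting before embedding means that even if the LSB channel is detected, the recovered bits are unreadable without the key.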

.

Missing 'Middle Scenarios': Uncovering Nuanced Conditions in Latin America's Housing Crisis

Paper in a nutshell: Integrating LSTM models with Latin American housing data to devise solutions. |

(Cityscape 2017) [PDF] [RG]

.

Dynamic power allocation using Stackelberg game in a wireless sensor network

Paper in a nutshell: Employing game theory for power distribution models. |

(Aerospace Conference 2016) [PDF] [RG]
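In a Stackelberg game, a leader moves first and the followers best-respond to its choice. The sketch below is a minimal power-allocation example that assumes a textbook pricing model rather than the paper's formulation. The leader (e.g., a sink node) announces a price per unit of transmit power. Each sensor with channel gain `g` then picks a power `p` in `[0, p_max]` to maximize `ln(1 + g*p) - price*p`. Finally, the leader searches a price grid for the highest revenue. The utility function, parameters, and function names are all hypothetical.

```python
def best_response(gain, price, p_max):
    """Follower: maximize ln(1 + gain*p) - price*p over 0 <= p <= p_max.

    The utility is concave in p; setting its derivative gain/(1 + gain*p) - price
    to zero gives p = 1/price - 1/gain, which is then clipped to [0, p_max].
    """
    p = 1.0 / price - 1.0 / gain
    return min(p_max, max(0.0, p))

def leader_price(gains, prices, p_max):
    """Leader: choose the grid price that maximizes revenue = price * total power."""
    def revenue(c):
        return c * sum(best_response(g, c, p_max) for g in gains)
    best = max(prices, key=revenue)
    return best, revenue(best)

gains = [0.5, 1.0, 2.0]                       # hypothetical channel gains
prices = [i / 100 for i in range(1, 200)]     # candidate prices 0.01 .. 1.99
price, rev = leader_price(gains, prices, p_max=5.0)
powers = [best_response(g, price, 5.0) for g in gains]
print(price, rev, powers)
```

The power cap `p_max` matters. Without it, a vanishing price would drive every sensor's power toward infinity and the leader's problem would have no interior optimum.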

.

Automatic image annotation using a hybrid engine

Paper in a nutshell: A hybrid engine that merges feature extraction with language models. |

(Indicon 2015) [PDF] [RG]

Posters

- FHE [PDF]

- JAXED [PDF]

- Pi-Tiles (Graduate Innovator Award) [PDF]

- The Prime Hexagon (Best Poster Award) [PDF]

What is KTB Wiki?

KTB Wiki, because the best way to store your knowledge is in an indexed SQL database.

This website was built on KTB Wiki. KTB Wiki is my side project, an attempt to consolidate knowledge gained during my Ph.D. journey. Though many other platforms provide similar services, creating KTB Wiki was a learning experience: it taught me concepts of indexing, load balancing, and in-memory file systems. KTB Wiki was built using MediaWiki and is intended for research purposes only.

Interesting Reads