Difference between revisions of "Kaustubh Shivdikar"

m (Duplicate images removed) (Tag: Visual edit) |

m (WIP Added) (Tag: Visual edit) |

||

| Line 20: | Line 20: | ||

====Education==== | ====Education==== | ||

| − | *PhD - Computer Engineering, Northeastern University [Expected Fall | + | *PhD - Computer Engineering, Northeastern University [Expected Fall 2023] |

*MS - Electrical and Computer Engineering, Northeastern University [May 2021] | *MS - Electrical and Computer Engineering, Northeastern University [May 2021] | ||

*BS - Electrical Engineering, Veermata Jijabai Technological Institute [May 2016] | *BS - Electrical Engineering, Veermata Jijabai Technological Institute [May 2016] | ||

| Line 104: | Line 104: | ||

======'''GNNMark: A benchmark suite to characterize graph neural network training on GPUs'''====== | ======'''GNNMark: A benchmark suite to characterize graph neural network training on GPUs'''====== | ||

| − | ([https://ispass.org/ispass2021/ ISPASS 2021]) [PDF] | + | ([https://ispass.org/ispass2021/ ISPASS 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/GNNMark.pdf PDF]] |

*[https://www.linkedin.com/in/trinayan-baruah-30/ Trinayan Baruah], '''[[Kaustubh Shivdikar]]''', [https://www.linkedin.com/in/shi-dong-neu/ Shi Dong], [https://syifan.github.io/ Yifan Sun], [https://www.linkedin.com/in/saiful-mojumder/ Saiful A Mojumder], [https://scholar.google.com/citations?user=do5tcEAAAAAJ&hl=en Kihoon Jung], [https://sites.google.com/ucam.edu/jlabellan/ José L. Abellán], [https://www.linkedin.com/in/ukidaveyash/ Yash Ukidave], [https://www.bu.edu/eng/profile/ajay-joshi/ Ajay Joshi], [http://icn.kaist.ac.kr/~jjk12/ John Kim], [https://ece.northeastern.edu/fac-ece/kaeli.html David Kaeli] | *[https://www.linkedin.com/in/trinayan-baruah-30/ Trinayan Baruah], '''[[Kaustubh Shivdikar]]''', [https://www.linkedin.com/in/shi-dong-neu/ Shi Dong], [https://syifan.github.io/ Yifan Sun], [https://www.linkedin.com/in/saiful-mojumder/ Saiful A Mojumder], [https://scholar.google.com/citations?user=do5tcEAAAAAJ&hl=en Kihoon Jung], [https://sites.google.com/ucam.edu/jlabellan/ José L. Abellán], [https://www.linkedin.com/in/ukidaveyash/ Yash Ukidave], [https://www.bu.edu/eng/profile/ajay-joshi/ Ajay Joshi], [http://icn.kaist.ac.kr/~jjk12/ John Kim], [https://ece.northeastern.edu/fac-ece/kaeli.html David Kaeli] | ||

| Line 128: | Line 128: | ||

======'''SMASH: Sparse Matrix Atomic Scratchpad Hashing'''====== | ======'''SMASH: Sparse Matrix Atomic Scratchpad Hashing'''====== | ||

| − | ([https://www.proquest.com/docview/2529815748?pq-origsite=gscholar&fromopenview=true MS Thesis, 2021]) [PDF] | + | ([https://www.proquest.com/docview/2529815748?pq-origsite=gscholar&fromopenview=true MS Thesis, 2021]) [[https://wiki.kaustubh.us/w/img_auth.php/SMASH_Thesis.pdf PDF]] |

| + | |||

| + | {| class="wikitable mw-collapsible mw-collapsed" | ||

| + | |+Abstract | ||

| + | !Abstract | ||

| + | |- | ||

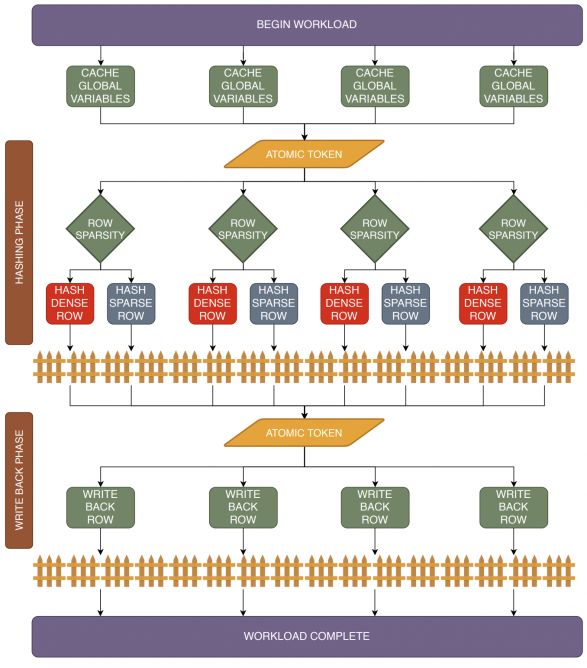

| + | |Sparse matrices, more specifically Sparse Matrix-Matrix Multiply (SpGEMM) kernels, are commonly found in a wide range of applications, spanning graph-based path-finding to machine learning algorithms (e.g., neural networks). A particular challenge in implementing SpGEMM kernels has been the pressure placed on DRAM memory. One approach to tackle this problem is to use an inner product method for the SpGEMM kernel implementation. While the inner product produces fewer intermediate results, it can end up saturating the memory bandwidth, given the high number of redundant fetches of the input matrix elements. Using an outer product-based SpGEMM kernel can reduce redundant fetches, but at the cost of increased overhead due to extra computation and memory accesses for producing/managing partial products. | ||

| + | |||

| + | |||

| + | In this thesis, we introduce a novel SpGEMM kernel implementation based on the row-wise product approach. We leverage atomic instructions to merge intermediate partial products as they are generated. The use of atomic instructions eliminates the need to create partial product matrices, thus eliminating redundant DRAM fetches. | ||

| + | |||

| + | To evaluate our row-wise product approach, we map an optimized SpGEMM kernel to a custom accelerator designed to accelerate graph-based applications. The targeted accelerator is an experimental system named PIUMA, being developed by Intel. PIUMA provides several attractive features, including fast context switching, user-configurable caches, globally addressable memory, non-coherent caches, and asynchronous pipelines. We tailor our SpGEMM kernel to exploit many of the features of the PIUMA fabric. | ||

| + | |||

| + | This thesis compares our SpGEMM implementation against prior solutions, all mapped to the PIUMA framework. We briefly describe some of the PIUMA architecture features and then delve into the details of our optimized SpGEMM kernel. Our SpGEMM kernel can achieve 9.4x speedup as compared to competing approaches. | ||

| + | |- | ||

| + | |[[File:SMASH Algorithm.png|669x669px]] | ||

| + | The SMASH Algorithm | ||

| + | |} | ||
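The row-wise product approach described in the abstract can be illustrated with a small sketch. This is not the thesis implementation: it is a single-threaded Python illustration in which a per-row dictionary stands in for the hashed scratchpad, and the in-place `+=` stands in for the atomic merge of partial products. The function name `spgemm_rowwise` and the `{row: {col: value}}` storage layout are assumptions made for this example, and none of the PIUMA-specific features are modeled.

```python
from collections import defaultdict


def spgemm_rowwise(a_rows, b_rows):
    """Row-wise sparse matrix-matrix multiply, C = A * B.

    Both inputs use a hypothetical {row: {col: value}} layout.
    """
    c_rows = {}
    for i, a_row in a_rows.items():
        # One accumulator per output row; stands in for the hashed scratchpad.
        acc = defaultdict(float)
        for k, a_ik in a_row.items():
            # Row i of C is a weighted sum of the rows of B selected by row i of A.
            for j, b_kj in b_rows.get(k, {}).items():
                # Merge each partial product as soon as it is generated,
                # so no partial-product matrix is ever materialized.
                acc[j] += a_ik * b_kj
        if acc:
            c_rows[i] = dict(acc)
    return c_rows


A = {0: {0: 1.0, 2: 2.0}, 1: {1: 3.0}}
B = {0: {1: 4.0}, 1: {0: 5.0}, 2: {1: 6.0}}
C = spgemm_rowwise(A, B)
print(C)
```

In a parallel setting, the `acc[j] += ...` update is the step that would need to be atomic, since several workers could produce partial products for the same output element.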

| Line 136: | Line 153: | ||

======'''Student cluster competition 2018, team northeastern university: Reproducing performance of a multi-physics simulations of the Tsunamigenic 2004 Sumatra Megathrust earthquake on the AMD EPYC 7551 architecture'''====== | ======'''Student cluster competition 2018, team northeastern university: Reproducing performance of a multi-physics simulations of the Tsunamigenic 2004 Sumatra Megathrust earthquake on the AMD EPYC 7551 architecture'''====== | ||

| − | ([https://sc18.supercomputing.org/ SC 2018]) | + | ([https://sc18.supercomputing.org/ SC 2018]) [PDF] |

| Line 145: | Line 162: | ||

======'''Speeding up DNNs using HPL based Fine-grained Tiling for Distributed Multi-GPU Training'''====== | ======'''Speeding up DNNs using HPL based Fine-grained Tiling for Distributed Multi-GPU Training'''====== | ||

| − | ([https://bostonarch.github.io/2018/ BARC 2018]) | + | ([https://bostonarch.github.io/2018/ BARC 2018]) [PDF] |

| Line 153: | Line 170: | ||

======'''Video steganography using encrypted payload for satellite communication'''====== | ======'''Video steganography using encrypted payload for satellite communication'''====== | ||

| − | ([https://2017.aeroconf.org/ Aerospace Conference 2017]) | + | ([https://2017.aeroconf.org/ Aerospace Conference 2017]) [PDF] |

| Line 161: | Line 178: | ||

======'''Missing'Middle Scenarios' Uncovering Nuanced Conditions in Latin America's Housing Crisis'''====== | ======'''Missing'Middle Scenarios' Uncovering Nuanced Conditions in Latin America's Housing Crisis'''====== | ||

| − | ([https://www.huduser.gov/portal/periodicals/cityscpe/vol19num2/article3.html Cityscape 2017]) | + | ([https://www.huduser.gov/portal/periodicals/cityscpe/vol19num2/article3.html Cityscape 2017]) [PDF] |

| Line 170: | Line 187: | ||

======'''Dynamic power allocation using Stackelberg game in a wireless sensor network'''====== | ======'''Dynamic power allocation using Stackelberg game in a wireless sensor network'''====== | ||

| − | ([https://2016.aeroconf.org/ Aerospace Conference 2016]) | + | ([https://2016.aeroconf.org/ Aerospace Conference 2016]) [PDF] |

| Line 178: | Line 195: | ||

======'''Automatic image annotation using a hybrid engine'''====== | ======'''Automatic image annotation using a hybrid engine'''====== | ||

| − | ([https://ieeexplore.ieee.org/xpl/conhome/7438527/proceeding Indicon 2015]) | + | ([https://ieeexplore.ieee.org/xpl/conhome/7438527/proceeding Indicon 2015]) [PDF] |

Revision as of 01:44, 20 August 2022

I am a Ph.D. candidate in the NUCAR lab at Northeastern University, working under the guidance of Dr. David Kaeli. My research focuses on designing hardware accelerators for sparse graph workloads.

My expertise lies in:

- Computer Architecture Simulator Design

- Graph Neural Network Accelerators

- Sparse Matrix Accelerators

- Homomorphic Encryption Accelerators

- GPU Kernel Design

Contact: shivdikar.k [at] northeastern [dot] edu, mail [at] kaustubh [dot] us

Education

- PhD - Computer Engineering, Northeastern University [Expected Fall 2023]

- MS - Electrical and Computer Engineering, Northeastern University [May 2021]

- BS - Electrical Engineering, Veermata Jijabai Technological Institute [May 2016]

Work

- Summer-Fall 2020 Coop: Parallel Computing Lab @ Intel Labs with Fabrizio Petrini.

- Summer-Fall 2019 Coop: Parallel Computing Lab @ Intel Labs with Fabrizio Petrini.

- Summer-Fall 2018 Coop: Mobile Robotics @ Omron Adept with George Paul.

Recent News

- June 2022: Mentored Lina Adkins on the GNN Acceleration project in the REU-Pathways program

- May 2022: Served as Submission Chair for the HPCA 2023 conference

- Jan 2020: Taught the GPU Programming Course at NEU

- April 2019: Graduate Innovator Award at the RISE 2019 Research Expo for our poster Pi-Tiles

- April 2018: Best Poster Award at the RISE 2018 Research Expo for our poster The Prime Hexagon

- Nov 2018: Mentored the NEU team for the Student Cluster Competition at the Supercomputing Conference 2018

- Nov 2017: Joined the NEU team for the Student Cluster Competition at the Supercomputing Conference 2017

Publications

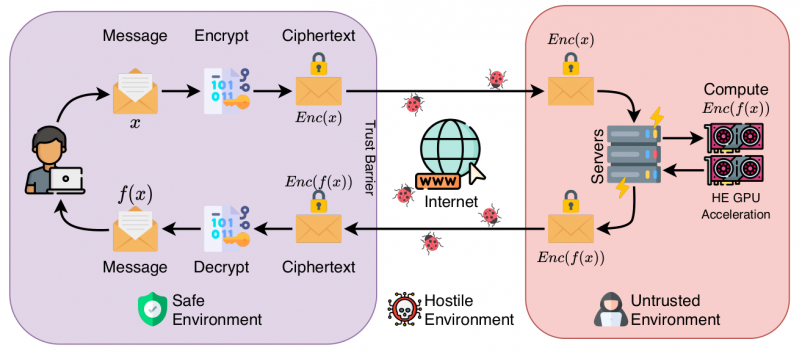

Accelerating Polynomial Multiplication for Homomorphic Encryption on GPUs

(SEED 2022) [PDF]

- Kaustubh Shivdikar, Gilbert Jonatan, Evelio Mora, Neal Livesay, Rashmi Agrawal, Ajay Joshi, José L. Abellán, John Kim, David Kaeli

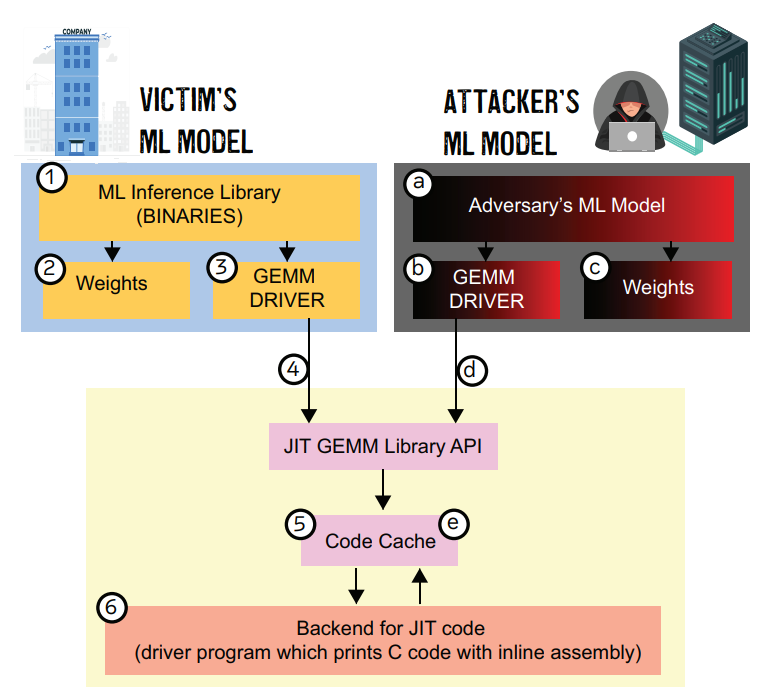

JAXED: Reverse Engineering DNN Architectures Leveraging JIT GEMM Libraries

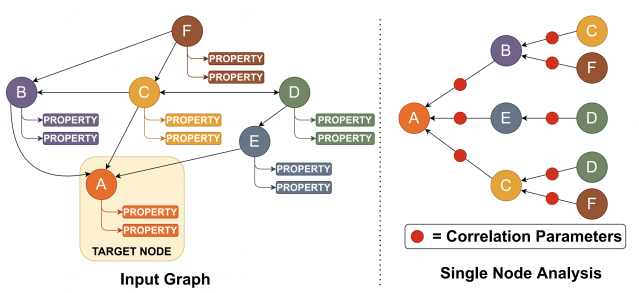

GNNMark: A benchmark suite to characterize graph neural network training on GPUs

(ISPASS 2021) [PDF]

- Trinayan Baruah, Kaustubh Shivdikar, Shi Dong, Yifan Sun, Saiful A Mojumder, Kihoon Jung, José L. Abellán, Yash Ukidave, Ajay Joshi, John Kim, David Kaeli

SMASH: Sparse Matrix Atomic Scratchpad Hashing

(MS Thesis, 2021) [PDF]

Student cluster competition 2018, team northeastern university: Reproducing performance of a multi-physics simulations of the Tsunamigenic 2004 Sumatra Megathrust earthquake on the AMD EPYC 7551 architecture

(SC 2018) [PDF]

Speeding up DNNs using HPL based Fine-grained Tiling for Distributed Multi-GPU Training

(BARC 2018) [PDF]

Video steganography using encrypted payload for satellite communication

(Aerospace Conference 2017) [PDF]

Missing 'Middle Scenarios': Uncovering Nuanced Conditions in Latin America's Housing Crisis

(Cityscape 2017) [PDF]

Dynamic power allocation using Stackelberg game in a wireless sensor network

(Aerospace Conference 2016) [PDF]

Automatic image annotation using a hybrid engine

(Indicon 2015) [PDF]

Posters

- JAXED

- Pi-Tiles

- The Prime Hexagon

What is KTB Wiki?

KTB Wiki, because the best way to store your knowledge is in an indexed SQL database.

This website was built on KTB Wiki, a side project in which I try to consolidate the knowledge gained during my Ph.D. journey. Though many other platforms provide a similar service, creating KTB Wiki was a learning experience: it taught me the concepts of indexing, load balancing, and in-memory file systems. KTB Wiki was built using MediaWiki and is intended for research purposes only.

Interesting Reads